We’re all in a hurry, so here’s the TL;DR. My aim with this essay is threefold:

- To undermine Facebook’s idealistic appeals to free speech, which it routinely deploys to absolve itself of responsibility for just about everything.

- To highlight the fundamental and recurring weaknesses in Mark Zuckerberg’s logic and leadership, and why they should scare us.

- To warn of the murky outlook for Facebook’s core product — and why that should scare us even more.

The point is not that my dinky lunge into political activism would’ve changed the trajectory of the election (it probably would’ve flopped of its own accord). It’s that I tried to engage in our nation’s political discourse during a pivotal moment in its history, and Mark Zuckerberg stole my mojo.

So now I am trolling him, as any patriot would.

All of this is true.

Part I: The Bedwetter

When I was little I pissed the bed.

This was normal until it wasn’t, so eventually they strapped a little blue box next to my head with jellyfish electrodes strung to my pajama crotch. The blue box wailed whenever I fell into a deep enough slumber to heed nature's call. It explains a lot.

Many years later I would learn the extent of this trauma — thanks to its repeated and sadomasochistic triggering by the political podcast Keepin’ it 1600. The show featured several former Obama speechwriters who, amid biting commentary and self-indulgent fart sniffing, regularly chastised listeners horrified by the looming threat of a Trump presidency — dubbing us “bedwetters”.

“They’re probably right,” I thought, remembering damper times. But then the Comey letter hit and the blue box started wailing, until it drove me crazy enough to act.

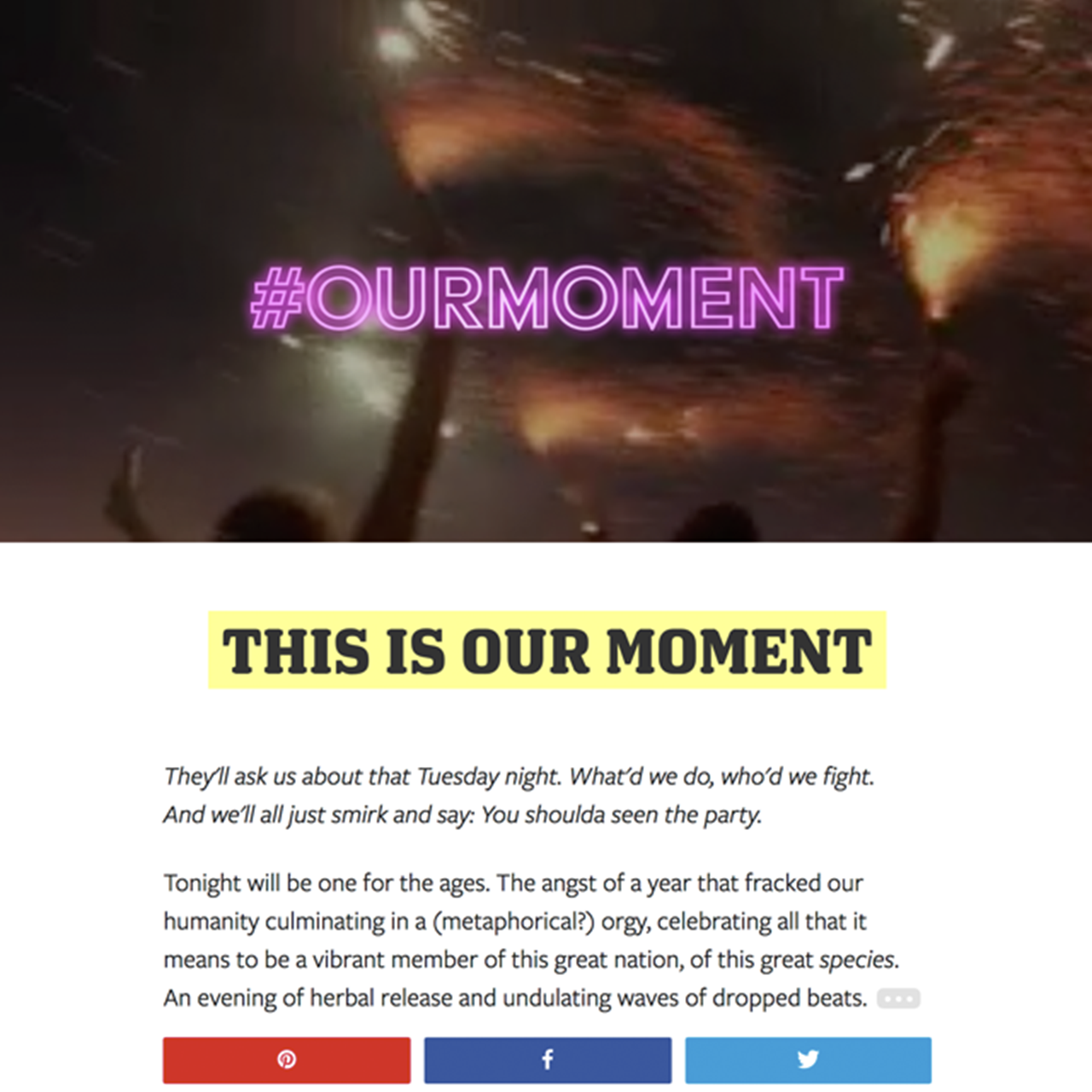

The idea was simple: Win, then party. Remind young people that when Trump goes down, a lot of them will too. The catharsis of his loss will propel them to new highs and mellow vibes. You get it.

Five days and considerable cannabis later, millennial.website [1] was born: a blatant grab for a few youthful heartstrings, in the hopes that one might have a large enough Instagram following to propel it to viral greatness and save America and maybe I’d wind up karaoking Kanye with Taylor Swift.

Narrator: He did not.

The hard stop for launch was the morning before the election, when the winds told me there’d be a wave to catch. The site was done. The copy, whatever. Friends who get big bucks as social media consultants gave it the thumbs up — then again, they are also nice. One last joint, a prayer to the gods —

And then:

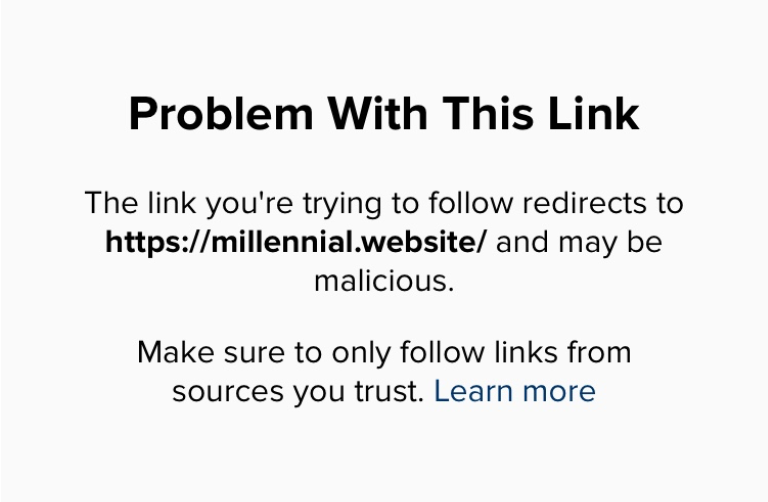

This is bad. Worse than you think, because of the structural sociopathy baked into Facebook Support — more on that later. All attempts to share the site on Facebook and Instagram are being met with alarming security errors; it’s DOA. Evasive maneuvers ensue.

A direct message to a VP at Instagram gets an immediate response (“Hey I'm in London at an event”). Fair enough, seeing as we have not spoken in five years. Other backchannels prove similarly rusty. There is promise, but there is also bureaucracy.

Hours pass. Morning fades to afternoon, the workday ends on the east coast. The wave I anticipated is really a tsunami; I probably would’ve been crushed anyway. But it is going, going, gone.

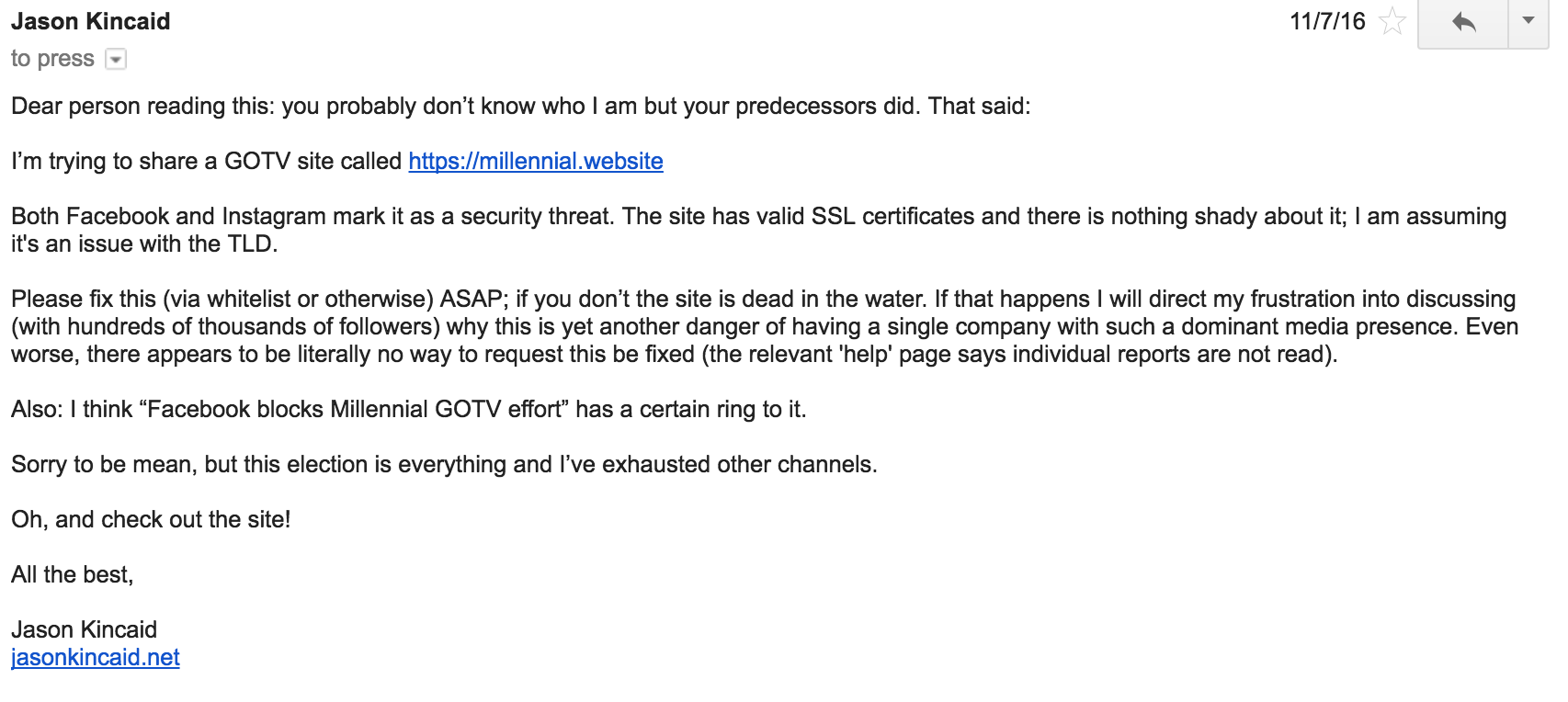

But fuck that — that damned blue box is roaring — so I revert to a previous version of myself and write a threatening email to Facebook PR.

Two hours later the site is no longer considered a security threat; a D.C.-based Facebook PR rep checks in to confirm as much.

Alas: it is already 10 PM on the east coast. In the meantime, attempts to share the site on other platforms have gone nowhere, and I need a change of pants.

Part II: Freedom of Screech

It is here that I’ll make a concession few would: the fact that millennial.website was blocked on November 7, 2016 is obnoxious, but understandable.

The explanation is simple. The ‘.website’ on which you currently stand is a relative newcomer to the domain markets, making its debut in 2014. Spammers have abused these domains — an op-ed last summer bemoans .xyz, .website, and the infamous .museum as hotbeds for nefarious actors — and Occam’s Razor suggests that Facebook’s vaunted algorithms learned to treat them with a heavy hand.

(Of course, Facebook doesn’t tell you anything when it blocks you, so this is necessarily conjecture.)

It’s important to recognize this point, because it is the sort of logic that Facebook employees will immediately find refuge in. After all, Facebook is dealing with immense volumes of content, much of it shared by sophisticated bad actors; the amount of vitriol that Facebook successfully blocks is surely staggering. There will inevitably be false positives, and alas, this was one of them.

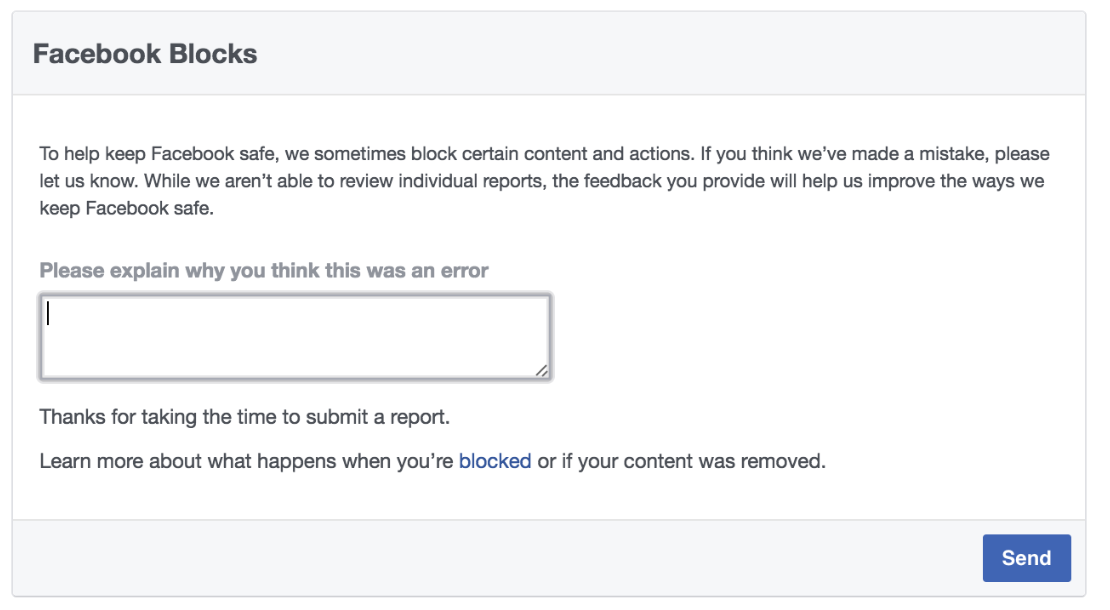

So: point conceded. Algorithms are going to screw up — they are coded by humans, after all. But if these automated errors are a given, so too should be the mechanisms to remedy them. You know, like being able to tell someone when the robots go off the rails.

Now we should fast-forward to the present, and remember how deeply Mark Zuckerberg and Sheryl Sandberg believe in free speech. It is the bedrock from which Facebook justifies its laissez-faire approach to the content it distributes — and the reason why it minimizes its own culpability in the epidemic of misinformation. “When you cut off speech for one person, you cut off speech for all people,” says Sandberg. “Freedom means you don’t have to ask for permission first,” says Zuckerberg.

Which is a good thing, because as of Election Day 2016 there was no way to ask for permission. Here’s the inscription on the brick wall I ran into:

Translation: go zuck yourself.

This sort of chilled, cloying copy is a Silicon Valley speciality. It is also in obvious and complete contrast to the enlightened narrative Zuckerberg and Sandberg have adopted as their shield in the ongoing discussion about Facebook’s role in policing content. If either of them believed in freedom of expression to the extent they claim, they’d have safeguards to ensure people aren’t arbitrarily silenced — which certainly entails “reviewing individual reports”.

Doing so would doubtless entail considerable resources (like hiring people to review reports in a timely manner), but Facebook is minting billions in profit every quarter. They have the means, but their convictions are second-hand. Better luck next time.

(There are myriad other examples of Facebook’s hypocrisy when it comes to censorship policies. Many of these are shaded by laws and norms, but this is more binary. Either you give people a way to appeal what your mindless, buggy software does, or you don’t.)

It is also imperative to recognize that for the vast majority of people who are unjustly smothered, there are no backchannels.

Part III: The Gears of Denial

As Mark Zuckerberg stood before Congress —

Oh, right. He’s a coward.

As Facebook’s sleek lawyer stood before Congress this week to provide focus-grouped answers to questions about Russia, he pointed to the many ways the company has already rectified the situation. Political ad transparency is going way up, staffers are being hired by the thousands, and Facebook will make whatever changes are necessary to ensure this never happens again.

Because Facebook doesn’t really care about political ads. They’re nice to have, sure — politics validate the company's global influence and are predictable boons to engagement. But the Donald Trump and Hillary Clinton campaigns spent a combined $81 million on Facebook ads. Last quarter alone, Facebook pulled in $10.3 billion in revenue. This is all a drop in the bucket.

Facebook just wants this nightmare to be over so we can go back to riding the stock price and Zuckerberg’s dopey visions. Just tell it what to do and move on.

We must not let this happen. The political ads are not the problem — they are merely symptoms of it. Thankfully, the media is increasingly focusing on the truth: that the root of Facebook’s darkness lies far deeper. That the real threat lies in 'organic' content; the sort that Facebook rewards and syndicates to the masses for free. That the game Facebook calls ‘social’ is in fact an exercise in siloing and amplifying our lesser angels, to the detriment of society and our own psyches.

Facebook does all this to keep us hooked. And when we’re hooked, it can show us ads. Billions and billions of dollars worth.

If Congress wants to make a dent, it needs to scrutinize Facebook’s business model, and the way that business model guides Mark Zuckerberg’s decisions. It needs to do this because Facebook has proven itself either unable or unwilling to recognize how its actions are warped by its profit motive — and because this tension is only going to get worse.

A telling case study can be found in how Facebook handled the Trending Topics debacle. [2]

In early 2016 a thinly-sourced article in Gizmodo reported that Trending Topics were being selected and edited by liberal-leaning humans — not the algorithms that Facebook had previously claimed to employ. Facebook freaked, begged conservatives for forgiveness, fired the humans, switched to its vaunted algorithms, and those algorithms promptly distributed obviously-fake stories to millions of people. Oops.

Tough calls are tough calls, and sometimes there are no good solutions. This was not one of those times. Facebook’s choice between “humans” and “algorithms” — as characterized by the press — was a false one. Trending Topics only debuted in 2014. They are hardly core to Facebook’s mission, and the company could’ve easily swapped the product for a widget that occupied the same space not-so-long ago. It’s not as if headline news is tough to come by.

But Facebook had other concerns.

In April 2016 The Information reported that “original broadcast sharing” on News Feed had declined by double-digits over the preceding year. This anodyne term belies its profound importance: it is Facebook’s bread-and-butter, comprising the baby photos, humblebrags, and emotional missives that define the company. It is our friends that keep us stuck to Zuck; when they leave, so do we — which puts Facebook’s immensely lucrative advertising business at risk.

Put another way: Facebook’s decline in original sharing is akin to an all-star athlete suffering chest pains.

As such, these vital signs — collectively referred to as “engagement” — shape the lens through which Mark Zuckerberg surveys his business. Any change that hurts engagement is unlikely to be seen as a priority; any new product is evaluated first and foremost by its social fecundity. This is not a recipe for objective decision making, but it is at least a predictable one.

Trending Topics were introduced because they are fountains of engagement: a personalized tabloid that guarantees you always have something new to get excited or furious about (easily shared, of course) whenever you visit Facebook. And so it was no surprise that Facebook wasted little time in implementing a new, algorithmically-edited version of the feature in lieu of humans — only to immediately screw the pooch.

“Two days after dismissing the editors, a fake news story about Megyn Kelly being fired by Fox News made the Trending list. Next, a 9/11 conspiracy theory trended. At least five fake stories were promoted by Facebook’s Trending algorithm during a recent three-week period analyzed by the Washington Post.

After that, the 2008 conspiracy post [“Hacked Obama Email Reveals He & Bush Rigged 2008 Election”] trended.” — Buzzfeed

Soon thereafter, Facebook VP Fidji Simo would explain that they switched to algorithms to facilitate scaling the product globally, while conceding the feature “isn’t as good as we want it to be right now.” In the same interview, Simo says that Facebook had seen success letting people flag stories on News Feed as ‘misleading’ — but that this functionality was not yet included as part of the new Trending Topics. Remember: this was in the months and weeks immediately preceding the election, when the stakes couldn't have been higher.

The question is: why?

Why not shelve the feature until it’s baked? Why the rush to deploy it globally, where — as Buzzfeed put it — “failures will potentially occur at a scale unheard of in the history of human communication”? Why are they in such a hurry to do things they have to immediately apologize for?

The answer is almost too mundane to warrant mention: it’s just business. If it juices engagement, it stays. Facebook’s PR team conjures a narrative about keeping people informed or connected or whatever, and we are left to deal with the collateral damage.

But Mark Zuckerberg doesn’t see it that way. To him, the tension between Facebook’s ideals and business objectives is itself a myth. From a recent sit-down with Bloomberg:

Throughout the interview, he seems irritated that his actions could be viewed as anything other than expansive benevolence.

“We’re in a pretty unique position, and we want to do the most good we can,” he says of Facebook. “There’s this myth in the world that business interests are not aligned with people’s interests. And I think more of the time than people want to admit, that’s not true. I think that they are pretty aligned.” [3]

We should take him at his word — and run for the hills.

Part IV: Virtually Zucked

Facebook is sort of screwed. I’m not talking about Russia, or Trending Topics, or even Mark Zuckerberg’s naive delusions. I mean: if you resurrected Steve Jobs and set him in the pilot seat, there’s a decent chance he’d throw his hands up and say, “burn it down.”

Because it doesn’t have anywhere to go. Zuckerberg’s grand new vision is to turn Facebook into a garden of vibrant online communities — and there’s something to that. Our society is indeed wanting for better social structures and more excuses to come together. The problem is that neither Zuckerberg nor the company he leads has a knack for the kind of fundamental reinvention this entails, to say nothing of its abysmal ethical track record.

Many of Facebook’s biggest products have been total flops (Paper, Home, and the laughably overhyped Messenger Bots come to mind). And the Groups initiative sounds more desperate than prescient: the team was “mostly ignored” until Zuckerberg’s epiphany came in the aftermath of the election. Already, employees are concerned:

“He was promoting Facebook Groups, a product that millions of people on Facebook used to talk about shared interests, debate, discuss and maybe debate some more.

This type of activity, he believed, was one of the keys to his sprawling company’s future. The goal of Facebook, he told his audience, which included many Groups leaders, was to “give people the power to build community and bring the world closer together.”

Inside Mr. Zuckerberg’s company, however, there was already growing concern among employees that some of that content was having the opposite effect.” — The New York Times

In the meantime Facebook is still utterly reliant on the algorithmic slot machine that is News Feed — which is what got us here. Facebook’s business model revolves around displaying content so titillating that it is addictive in every sense of the word, and this translates to affirming our pre-existing beliefs (which foments polarization) and sending us careening on emotional rollercoasters, plus some baby photos. What’s worse: the algorithms that steer these dynamics are entirely opaque with zero accountability. [4]

And then there is Virtual Reality.

VR remains so underwhelming that it is hard to view with concern — aside from its tendency to induce nausea — and plenty of tech luminaries are quick to dismiss the dystopian futures portended by decades of science fiction. But VR is Mark Zuckerberg’s next big bet, which is reason enough to worry. Early next year the company will begin selling a standalone headset for $200, a fraction of the competition’s price and one designed to bring VR to a scale that Wall Street cares about.

Any technology has its downsides, and VR is especially precarious. It will likely be the most addictive technology ever built, with immense potential for abuse. And once again, Zuckerberg is utterly unprepared. During his keynote presentation at this year's Game Developers Conference, MMO pioneer Raph Koster shared an alarming exchange he had with Facebook's CEO.

Koster: "What do you think are the social and ethical implications of social virtual reality and connected augmented reality?"

Zuckerberg: "What ethical implications?"

You don’t have to envision a world like The Matrix or Ready Player One to see how this can spiral into darkness. [5] Consider a more mundane scenario, a few years out, as it becomes clear that Facebook has lost its momentum and is struggling to repeat its success in the developing world.

What happens when Facebook is growing desperate to offset a declining News Feed, and comes to see the hundred million people jacked into its headsets as a resource to be tapped? Is there reason to believe it will show restraint in the abundance of advertising it immerses us in? Will it facilitate the exploitation of the most heavily addicted — as it did in the glory days of FarmVille — by inducing them to spend endless hours wandering its virtual hallways? Are we sure we want to give such a high-fidelity link to our neocortexes to a man who only needed two dimensions to undermine democracy?

And finally: what checks are there against Mark Zuckerberg’s infamous “ruthless survival instinct”?

That is the scariest thing about all this. We have yet to see Mark Zuckerberg when his company is truly in trouble. Facebook has never had a viable competitor; only maybe-somedays, and those days are over. This week’s Congressional testimony represents its gravest threat ever, and Zuckerberg did not even bother to show up.

Facebook has hurt us simply by winning. What happens when it starts to lose?

Part V: Epilogue

An essay like this one is about as effective as chimps flinging poo at their zoo handlers — and similarly rewarding. But do not mistake it for progress. Real change means forcing Facebook to evolve beyond PR-driven band-aids. It means giving researchers, lawmakers, and citizens a chance — to understand how their minds are being shaped by these algorithms, and to have a say in those dynamics. It means a fundamental change in Facebook's internal culture, which is hard to imagine under its current leadership.

But that stuff's hard. So why not buy a vintage soft T-Shirt?

Oh, and that GOTV thing? Maybe it worked a little bit.

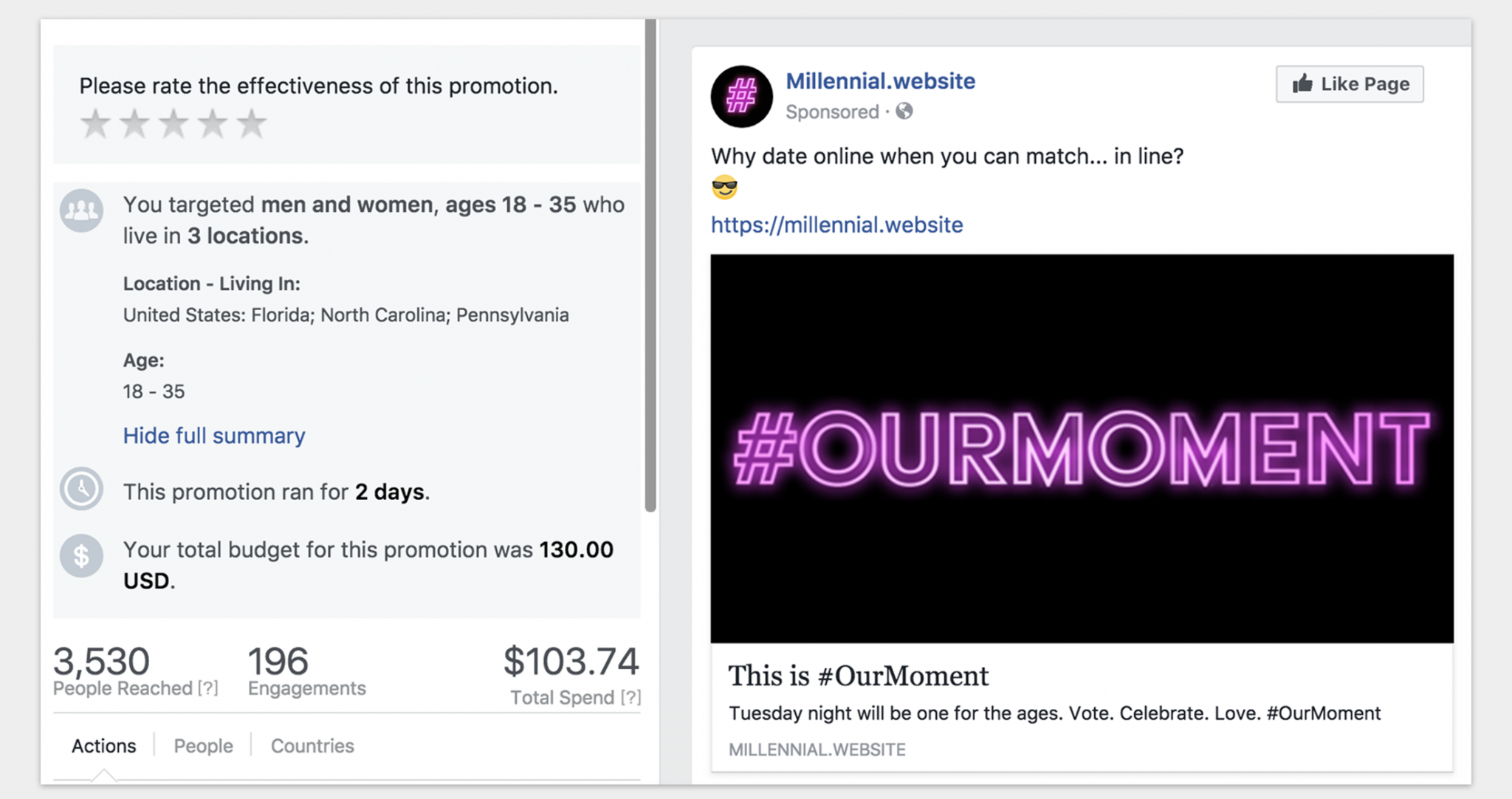

Turns out Facebook ads work pretty well when they don't perceive you as a security threat (there's a reason Facebook rakes in so much money). In fact, they worked well enough to wake the trolls — who tried resetting my Facebook password twenty times in one hour on election day. Funny what gets by those security algorithms.

All told the ads reached around 12,350 people in Pennsylvania, Florida, North Carolina, and Nevada.

Further Reading

Time Well Spent — This non-profit movement, led by former Google ethicist Tristan Harris, is pushing the technology industry toward practices and business models that are not in constant battle with the wellbeing of society and our mental health. Eventually Apple will realize that it is uniquely positioned to capitalize on this (it sells hardware, not our minds), and other firms will be forced to compete on their products’ humanity, not just megapixels. [6] The key is to force these firms to actually change, not just come up with aspirational TV commercials.

Professor Zeynep Tufekci — A sociologist who is beyond tech-savvy; she can explain these algorithms and their pitfalls better than the people building them. Watch this TED talk, follow her on Twitter, and read this exchange she had with a Facebook executive on the dynamics of News Feed.

The Attention Merchants / Tim Wu — Wu is a law professor at Columbia; his book charts the rise of modern advertising (which is not as old as you think it is, and has unnerving roots in war propaganda) and the dynamics of mass media up to the present day. Don’t miss his academic paper on how a new approach to anti-trust could be applied to companies like Facebook. Also see Wu’s advice to Facebook this week in the New York Times, where he encourages Facebook to become a Public Benefit Corporation.

You Are the Product — John Lanchester for The London Review of Books. A sweeping takedown of Facebook, more sharply written than any tech reporter could ever muster (because they have to stay on speaking terms with Facebook PR).

Jason Kincaid covered Facebook as a senior writer at TechCrunch from 2008-12. He coauthored a book about the firm, wrote the first article detailing the algorithms behind Facebook News Feed, and was once punked by Facebook’s engineers. He played himself on HBO’s Silicon Valley and is currently dining on quail.

Published November 3, 2017

1. This link goes to usa.millennial.website, which is a clone of this site as it stood on Election Day 2016.

2. The feature is neither innovative nor impressive: just a handful of newsy links that people are inclined to click on, no matter how dull their friends are. It resides in some of the most valuable real estate on the internet — every Facebook homepage — so everyone sees it.

3. I am reminded of a passage from Kurt Vonnegut’s Mother Night (condensed for clarity). “I have never seen a more sublime demonstration of the totalitarian mind, a mind which might be likened unto a system of gears whose teeth have been filed off at random. The dismaying thing about the classic totalitarian mind is that any given gear, though mutilated, will have at its circumference unbroken sequences of teeth that are immaculately maintained, that are exquisitely machined. The missing teeth, of course, are simple, obvious truths, truths available and comprehensible even to ten-year-olds, in most cases. The willful filing off of gear teeth, the willful doing without certain obvious pieces of information... that is the closest I can come to explaining the legions, the nations of lunatics I’ve seen in my time.”

4. Even this corrosive recipe is losing its potency, as evidenced by the aforementioned decline in original sharing. Facebook is now testing a bifurcated News Feed that places renewed emphasis on the content shared by our friends, rather than the media organizations we’ve subscribed to. That sounds promising at first blush, but it may compound Facebook’s silo effect, further stratifying society into groups of people who agree with each other. The fact that Facebook is testing this system at all — after spending years courting those very media organizations, who are now terrified — is indicative of the gravity of the situation. Again: it is our friends that keep us glued to Facebook. Those chest pains must be flaring.

5. But maybe you should: the legendary programmer John Carmack, who is CTO of Facebook’s VR company Oculus, is on record saying, "We probably are heading more on the path of The Matrix than we are to Mars."

6. Prediction: Andy Rubin’s company Essential will try to do this for Android. Ed. update: or not!